Modern full-stack engineering isn’t confined to just coding a front-end and back-end — it’s about bridging the user interface (UI), DevOps processes, and artificial intelligence (AI) into one cohesive, resilient system. A full-stack engineer today might design an Angular UI, implement a Node.js API, set up CI/CD automation, and even integrate an AI-powered feature — all with an eye toward scalability and reliability.

This article explores a holistic approach to resilient system design, touching every layer from the Angular frontend to the Node backend, with DevOps and AI as glue that binds them. We’ll use examples, code snippets, and diagrams to illustrate how these pieces come together. The content is geared to be accessible to general developers while also delving into details that senior engineers and DevOps architects expect.

Let’s start by examining the front-end and user experience, because a resilient system ultimately must delight the user even in adverse conditions.

Resilient UI/UX With Angular Frontend

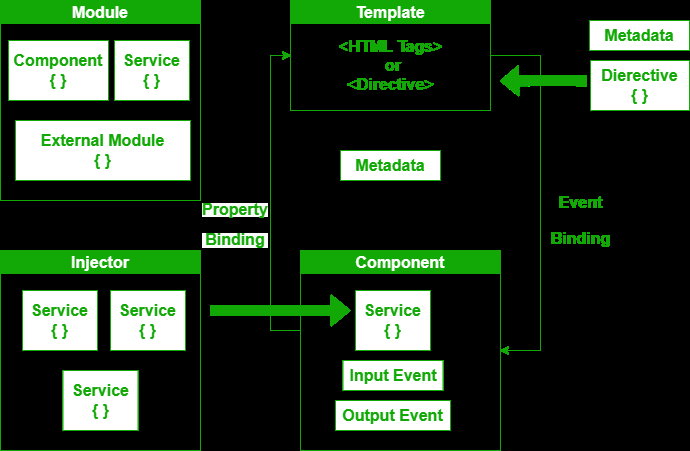

A resilient system begins in the browser. Angular, as a Google-backed framework built in TypeScript, provides a strong foundation for building robust single-page applications. Its architecture encourages clear separation of concerns and reusability. Full-stack engineers leverage these Angular features to create UIs that can withstand failures gracefully.

1. Graceful Error Handling

No matter how reliable the backend, network requests can fail or return errors. A resilient UI anticipates this. In Angular, developers might use HttpClient with RxJS operators to implement retries or graceful error handling on service calls.

For example, if a data fetch fails, the UI can catch the error and display a user-friendly message with a “Retry” option instead of a blank screen. This embodies the “fail fast, recover gracefully” principle. A simple snippet using RxJS could look like:

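    // Assumes: import { EMPTY } from 'rxjs'; import { catchError, retry } from 'rxjs/operators';
    this.apiService.getData().pipe(
      retry(2), // resubscribe up to twice on failure
      catchError(err => {
        // Show a friendly message instead of leaving the screen blank
        this.errorMessage = "Oops! Something went wrong. Please try again.";
        return EMPTY; // graceful fallback: complete without emitting a value
      })
    ).subscribe(data => this.items = data);

2. Loading States and Offline Support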

Resilient UIs also account for slow or no connectivity. Techniques like skeleton screens (placeholder content that mimics the layout of real data) keep users engaged during loading, avoiding frustration from blank pages. Angular’s declarative templates make it easy to swap in a loading component while data is in transit.

Furthermore, as a full-stack engineer, you might implement Progressive Web App (PWA) capabilities in Angular, using Service Workers for caching and offline access. This way, if the network is down, the app can still serve some functionality or at least inform the user gracefully. An offline-first Angular app can cache critical API responses and static assets, so the user’s last known data or a meaningful offline page is shown.
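As a minimal sketch of the "last known data" idea, independent of Angular's service worker support, the following TypeScript keeps the most recent successful response in memory and falls back to it when a request fails. The names `LastKnownCache`, `FetchResult`, and `ViewState` are hypothetical and chosen only for illustration; a real PWA would more likely persist data through the browser's Cache Storage API or IndexedDB so it survives a page reload.

```typescript
// Outcome of a network call, reduced to success or failure for this sketch.
type FetchResult<T> = { ok: true; data: T } | { ok: false; error: string };

// What the UI should render: fresh data, stale cached data, or an offline notice.
type ViewState<T> =
  | { kind: "fresh"; data: T }
  | { kind: "stale"; data: T; cachedAt: number }
  | { kind: "offline"; message: string };

// Hypothetical helper that remembers the last successful response in memory.
class LastKnownCache<T> {
  private entry: { data: T; cachedAt: number } | undefined = undefined;
  private readonly now: () => number;

  // The clock is injectable so the behavior can be tested deterministically.
  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  // Turn a fetch outcome into a view state, updating the cache on success.
  resolve(result: FetchResult<T>): ViewState<T> {
    if (result.ok) {
      this.entry = { data: result.data, cachedAt: this.now() };
      return { kind: "fresh", data: result.data };
    }
    if (this.entry !== undefined) {
      // Network failed, but earlier data exists: show it and mark it as stale.
      return { kind: "stale", data: this.entry.data, cachedAt: this.entry.cachedAt };
    }
    // Nothing cached yet: fall back to a meaningful offline message.
    return { kind: "offline", message: "You appear to be offline. Please try again later." };
  }
}
```

A component could map the three `kind` values to three template branches, so the user always sees either data or an explanation, never a blank page.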

3. User Flow Robustness

Designing with user flows in mind helps preempt edge cases in the UI. A user flow diagram can outline every step a user takes and is a great tool to identify where things might go wrong. By mapping these out, full-stack teams ensure there are no dead ends in the UI.

In fact, user flowcharts become an important artifact for communicating how the app should respond, even when the “happy path” is disrupted. They serve as an illustrated guide for the team, showing the steps a user goes through and how the system should respond at each step.

For example, if an external payment service is down, the flow diagram would reveal the need for a fallback screen informing the user and perhaps logging the issue for DevOps to monitor. This proactive UX planning is as vital to resilience as any server-side fix.

4. Performance and UX Under Load

Resilience isn’t only about failure; it’s also about handling high loads smoothly. On the UI side, this might involve using efficient change detection in Angular, virtualization for large lists, and caching of data on the client to reduce repeated backend calls.
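To make list virtualization concrete, here is a small sketch of the arithmetic behind it: given the scroll position, only the rows inside (or just outside) the viewport are rendered. The function name `visibleRange` and its parameters are assumptions made for this example, and fixed-height rows are assumed; in an Angular project, a ready-made virtual scrolling component would normally handle this.

```typescript
// Half-open range of list items to render: indices in [start, end).
interface VisibleRange {
  start: number;
  end: number;
}

// Compute which items of a fixed-row-height list need to be in the DOM.
// `overscan` adds a few extra rows above and below to avoid flicker while scrolling.
function visibleRange(
  scrollTop: number,
  viewportHeight: number,
  itemHeight: number,
  totalItems: number,
  overscan: number = 2
): VisibleRange {
  if (itemHeight <= 0 || totalItems <= 0) {
    return { start: 0, end: 0 };
  }
  // Index of the first row that is at least partly visible.
  const firstVisible = Math.floor(scrollTop / itemHeight);
  // One past the index of the last row that is at least partly visible.
  const lastVisibleExclusive = Math.ceil((scrollTop + viewportHeight) / itemHeight);
  const start = Math.min(totalItems, Math.max(0, firstVisible - overscan));
  const end = Math.max(start, Math.min(totalItems, lastVisibleExclusive + overscan));
  return { start, end };
}
```

With a 500-pixel viewport, 50-pixel rows, and the default overscan, a list scrolled to the top renders only items 0 through 11 of 10,000, which keeps change detection and DOM size small regardless of list length.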

A full-stack engineer might coordinate with DevOps to use a Content Delivery Network (CDN) for serving the Angular app’s static assets, reducing load on the origin and speeding up client loads globally. All these measures contribute to a UI that remains responsive and user-friendly under stress.

Robust Node.js Backend and DevOps Pipeline Integration

On the server side, Node.js offers a fast, event-driven runtime that is excellent for I/O-intensive workloads typical of web APIs. However, a single Node process is single-threaded by nature, so resilient system design often entails scaling out or using Node’s clustering to utilize multiple CPU cores.

In our full-stack scenario, the Node backend might serve RESTful APIs consumed by the Angular frontend, perform server-side rendering for SEO, or act as a Backend-for-Frontend (BFF) that aggregates microservice responses for the UI. Designing this layer for resilience involves both application-level patterns and infrastructure-level practices.

1. Resilience Patterns in Node.js

Even in a well-built Node application, failures happen: a database might go down or an external API might time out. Borrowing concepts from reactive systems, we implement patterns such as Retry, Circuit Breaker, and Bulkhead to make the API more robust.

Retry

The backend can automatically retry transient failures. For instance, if a payment gateway call fails due to a network glitch, the Node service can retry after a short delay. A library like axios-retry (for HTTP calls) can be configured to attempt a few retries with exponential backoff. As an example, one could wrap an external API call with retry logic:

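    const axios = require('axios');
    // Note: on axios-retry v4 and later, use require('axios-retry').default instead
    const axiosRetry = require('axios-retry');

    // Retry up to 3 times, waiting exponentially longer between attempts
    axiosRetry(axios, { retries: 3, retryDelay: axiosRetry.exponentialDelay });

    async function fetchWithRetry() {
      try {
        const response = await axios.get('https://api.example.com/data');
        return response.data;
      } catch (err) {
        // Reached once retries are exhausted or the error is not retryable
        console.error('Failed to fetch data after retries:', err.message);
        return null; // fallback: the caller must handle null
      }
    }

Circuit Breaker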

This pattern prevents a service from repeatedly trying an operation that is likely to fail. Using a circuit-breaker library like Opossum in Node, we can wrap calls so that once failures cross a configured threshold, the circuit opens and further calls fail immediately for a cool-off period. After that period, a trial call is let through to test whether the dependency has recovered. This protects the system from cascading failures, akin to an electrical circuit breaker flipping off to avoid damage. While the circuit is open, a fallback such as cached data or a default response can be returned instead. Circuit breakers improve overall system responsiveness under failure by not tying up resources with doomed requests.
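The state machine behind a circuit breaker can be sketched in a few dozen lines. The class below is a hand-rolled illustration, not Opossum's API: the name `SimpleCircuitBreaker`, its options, and the choice to count consecutive failures are all assumptions made for this example. A production library adds timeouts, rolling failure statistics, and event hooks on top of the same idea.

```typescript
type BreakerState = "closed" | "open" | "half-open";

interface BreakerOptions {
  failureThreshold: number; // consecutive failures before the circuit opens
  resetTimeoutMs: number;   // cool-off period before a trial call is allowed
  now?: () => number;       // injectable clock, useful for tests
}

// Hypothetical minimal breaker; simplified to count consecutive failures.
class SimpleCircuitBreaker {
  private state: BreakerState = "closed";
  private failures = 0;
  private openedAt = 0;
  private readonly failureThreshold: number;
  private readonly resetTimeoutMs: number;
  private readonly now: () => number;

  constructor(options: BreakerOptions) {
    this.failureThreshold = options.failureThreshold;
    this.resetTimeoutMs = options.resetTimeoutMs;
    this.now = options.now ?? (() => Date.now());
  }

  // Current state; an open breaker becomes half-open once the cool-off has elapsed.
  getState(): BreakerState {
    if (this.state === "open" && this.now() - this.openedAt >= this.resetTimeoutMs) {
      this.state = "half-open";
    }
    return this.state;
  }

  recordSuccess(): void {
    this.failures = 0;
    this.state = "closed";
  }

  recordFailure(): void {
    this.failures += 1;
    // A failed trial call in half-open reopens the circuit immediately.
    if (this.state === "half-open" || this.failures >= this.failureThreshold) {
      this.state = "open";
      this.openedAt = this.now();
    }
  }

  // Run `action` through the breaker; use `fallback` when open or when the call fails.
  async exec<T>(action: () => Promise<T>, fallback: () => T): Promise<T> {
    if (this.getState() === "open") {
      return fallback(); // fail fast without touching the dependency
    }
    try {
      const result = await action();
      this.recordSuccess();
      return result;
    } catch {
      this.recordFailure();
      return fallback();
    }
  }
}
```

One simplification to be aware of: while half-open, this sketch lets every caller through until one of them succeeds or fails, whereas stricter implementations allow only a single trial call.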

Bulkhead and Isolation

In microservices or multi-feature systems, the bulkhead pattern localizes failures. For example, if one route in the Node API is slow or error-prone, it should not be able to block the event loop or exhaust memory and connection pools to the point that other routes (such as /auth or /payments) also break. In Node, strategies include separating concerns into different services (microservices) or at least running separate Node processes or clusters for isolation. One could run multiple Node processes and ensure the load balancer or orchestrator can detect and replace unhealthy instances.
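Inside a single process, a lightweight form of the bulkhead idea is to cap how many requests each route group may have in flight, so one slow dependency cannot absorb all capacity. The sketch below assumes a hypothetical `Bulkhead` class that rejects work when its compartment is full; it does not protect against CPU-bound code blocking the event loop, which still calls for process-level isolation.

```typescript
// Hypothetical in-process bulkhead: a bounded counter of in-flight tasks.
class Bulkhead {
  private active = 0;
  private readonly maxConcurrent: number;

  constructor(maxConcurrent: number) {
    this.maxConcurrent = maxConcurrent;
  }

  // Number of tasks currently holding a slot.
  get inFlight(): number {
    return this.active;
  }

  // Claim a slot if one is free; returns false when the compartment is full.
  tryAcquire(): boolean {
    if (this.active >= this.maxConcurrent) {
      return false;
    }
    this.active += 1;
    return true;
  }

  // Give a slot back; guarded so the counter never goes negative.
  release(): void {
    if (this.active > 0) {
      this.active -= 1;
    }
  }

  // Run `task` inside the compartment, or return `onRejected()` when it is full.
  async run<T>(task: () => Promise<T>, onRejected: () => T): Promise<T> {
    if (!this.tryAcquire()) {
      return onRejected();
    }
    try {
      return await task();
    } finally {
      this.release(); // always free the slot, even if the task throws
    }
  }
}

// One compartment per route group (limits are illustrative, not recommendations).
const authBulkhead = new Bulkhead(20);
const paymentsBulkhead = new Bulkhead(5);
```

A route handler would wrap its downstream call in `paymentsBulkhead.run(...)` and answer with an HTTP 503 from `onRejected`, leaving capacity for the other routes untouched.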

2. Scalable Architecture (Monolith vs. Microservices)

A full-stack engineer must decide on an architecture that aligns with resilience and scaling needs. A monolithic Node app is simpler to start with and can be resilient if properly containerized and scaled. However, as features grow, a microservices architecture can offer stronger resilience by decoupling services. For example, an e-commerce system might split into separate Node services: user-service, product-service, order-service, each with its own database.

This way, if the product-service goes down, the user still might log in and browse cached product info, and other services remain unaffected (the bulkhead concept at the architecture level). Each microservice can be scaled or restarted independently. The downside is the added complexity in orchestration and DevOps, which we’ll address through container orchestration later. The choice often comes down to team size, expertise, and specific uptime requirements. Notably, large systems like Netflix or Amazon use microservices for both scalability and fault isolation, so one service failing rarely takes down the whole platform.

3. CI/CD Pipeline: From Code to Deployment

DevOps is the thread that weaves UI and backend into a reliable delivery mechanism. A well-designed continuous integration and continuous deployment (CI/CD) pipeline is essential for resilient systems because it reduces human error and ensures consistency. Let’s consider how our Angular + Node application might be built, tested, and deployed in an automated pipeline.

Continuous Integration (CI)

When a developer pushes code (front-end or back-end) to the repository, a CI pipeline triggers automatically. For example, using a platform like GitHub Actions or Jenkins, the pipeline will run builds and tests. For the Angular app, it will run ng build --configuration production (the older --prod flag is deprecated and has been removed from recent Angular CLI versions) and execute unit tests and perhaps end-to-end tests with a tool such as Cypress (Protractor, long the Angular default, has reached end of life). For the Node API, it might run npm test for unit tests. CI ensures that new code doesn’t break existing functionality.

In a resilient setup, the CI step also includes linting and static code analysis to catch quality issues early. For example, an Azure DevOps pipeline might lint, restore dependencies, run all tests, and even perform security checks on each commit. Only if all checks pass will it proceed to packaging.

Build Artifacts and Containerization

After the tests pass, the pipeline produces build artifacts. For Angular, this is a set of static files (HTML, JS, CSS) ready to be served. For Node, it could be a bundled app or, commonly, a Docker image containing the Node application.

Containerization is crucial for consistency across environments — “it works on my machine” issues are mitigated when the same Docker image runs in development, staging, and production environments. Our pipeline can build a Docker image for the Node API and another for a web server that will serve the Angular app, or we might use a single image that serves static files via Node.

Continuous Deployment (CD)

With artifacts ready, the CD part takes over to deploy to environments. A typical flow is to deploy first to a staging environment (or test server) automatically. Infrastructure-as-Code tools can define how to deploy our containers.

Once in staging, the pipeline may run integration tests or smoke tests — for example, calling a health-check endpoint of the Node API and loading the Angular app to ensure basic functionality. Only after validation will there be a promotion to production. In many setups, promotion might be manual or require human approval for extra safety.
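The health-check endpoint that such a smoke test calls could compute its response along the following lines. This is a simplified sketch: `buildHealthReport` and the check names are hypothetical, and the checks are synchronous here, whereas real dependency checks (a database ping, for example) would be asynchronous.

```typescript
type CheckResult = "up" | "down";

interface HealthReport {
  status: "ok" | "degraded";
  httpStatus: 200 | 503; // 503 tells probes and smoke tests the instance is not ready
  checks: Record<string, CheckResult>;
}

// Run every dependency check and summarize the outcome for a /health style endpoint.
function buildHealthReport(checks: Record<string, () => boolean>): HealthReport {
  const results: Record<string, CheckResult> = {};
  let allUp = true;
  for (const [checkName, check] of Object.entries(checks)) {
    let up = false;
    try {
      up = check();
    } catch {
      up = false; // a check that throws counts as down
    }
    results[checkName] = up ? "up" : "down";
    if (!up) {
      allUp = false;
    }
  }
  return allUp
    ? { status: "ok", httpStatus: 200, checks: results }
    : { status: "degraded", httpStatus: 503, checks: results };
}
```

The pipeline's smoke test then only needs to assert on the HTTP status code, while the per-dependency detail in `checks` helps whoever investigates a failed promotion.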

Blue-Green or Canary Deployments

For zero-downtime releases and risk mitigation, DevOps architects often employ blue-green or canary deployments. Our pipeline could deploy the new version of the Node+Angular stack in parallel with the old one and then switch traffic from old to new, either all at once (blue-green) or gradually for a growing share of users (canary). If something goes wrong, traffic can quickly revert to the stable version. This strategy greatly increases the resilience of deployment: a bad release need not become a user-facing outage.
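The gradual traffic switch can be illustrated with a deterministic routing function. In practice this decision usually lives in a load balancer or service mesh rather than in application code, so treat the following as a sketch of the logic only; `bucketFor` and `routeVersion` are hypothetical names, and the hash is a simple FNV-1a style function chosen for brevity.

```typescript
type Version = "stable" | "canary";

// Map a user ID to a fixed bucket in [0, 100) using a simple FNV-1a style hash,
// so the same user always lands in the same bucket.
function bucketFor(userId: string): number {
  let hash = 2166136261;
  for (let i = 0; i < userId.length; i++) {
    hash ^= userId.charCodeAt(i);
    hash = Math.imul(hash, 16777619);
  }
  return (hash >>> 0) % 100;
}

// Send roughly `canaryPercent` percent of users to the new version.
// 0 keeps everyone on the old version; 100 completes the switch.
function routeVersion(userId: string, canaryPercent: number): Version {
  const percent = Math.min(100, Math.max(0, canaryPercent));
  return bucketFor(userId) < percent ? "canary" : "stable";
}
```

Because the bucket depends only on the user ID, raising the percentage only ever moves users from stable to canary, and a rollback is a matter of setting the percentage back to 0.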

4. Infrastructure and Operations

Beyond CI/CD, resilience is enforced by how we host and monitor our system. In a cloud environment, we might deploy our Node.js microservices and Angular static site on a platform like Kubernetes or AWS ECS. Container orchestration adds another layer of self-healing and scaling.

For instance, Kubernetes will automatically restart a Node container if it crashes, and can leverage readiness probes to avoid sending traffic to an unhealthy instance. It’s designed with self-healing capabilities to maintain the desired state, for example, replacing failed containers and rescheduling them on healthy nodes. This means even if our application encounters a transient failure, the orchestration layer can often recover from it without human intervention.

Integrating AI: Intelligent Features and AIOps

No modern tech discussion is complete without AI. In full-stack resilient systems, AI plays two broad roles: enhancing the user experience and improving operations. A full-stack engineer with AI knowledge can bring powerful capabilities to both the application and its infrastructure.

AI in Backend and DevOps (AIOps)

AI-driven tooling on the backend enhances resilience through smarter monitoring and automation. Machine learning models analyze logs, metrics, and traces to detect anomalies or regressions before they propagate into outages. Time-series forecasting and clustering can drive predictive autoscaling and capacity planning, provisioning resources just ahead of demand spikes.
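As a deliberately simple stand-in for the anomaly-detection models mentioned above, the sketch below flags a metric sample when it deviates from a rolling baseline by more than a set number of standard deviations (a z-score test). This is a statistical baseline rather than a trained model; the class name `RollingAnomalyDetector`, the window size, the warm-up length, and the threshold are all assumptions made for illustration.

```typescript
// Hypothetical rolling z-score detector for a single metric (e.g. response time in ms).
class RollingAnomalyDetector {
  private readonly samples: number[] = [];
  private readonly windowSize: number;
  private readonly zThreshold: number;
  private readonly minSamples: number;

  constructor(windowSize: number = 30, zThreshold: number = 3, minSamples: number = 5) {
    this.windowSize = windowSize;
    this.zThreshold = zThreshold;
    this.minSamples = minSamples;
  }

  // Returns true if `value` is anomalous relative to the current window,
  // then adds the value to the window (anomalous or not).
  observe(value: number): boolean {
    let anomalous = false;
    if (this.samples.length >= this.minSamples) {
      const mean = this.samples.reduce((sum, x) => sum + x, 0) / this.samples.length;
      const variance =
        this.samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / this.samples.length;
      const stdDev = Math.sqrt(variance);
      // A flat baseline (stdDev of 0) is never flagged, to avoid dividing by zero.
      anomalous = stdDev > 0 && Math.abs(value - mean) / stdDev > this.zThreshold;
    }
    this.samples.push(value);
    if (this.samples.length > this.windowSize) {
      this.samples.shift(); // keep only the most recent `windowSize` samples
    }
    return anomalous;
  }
}
```

Feeding each new latency or error-rate sample into `observe` gives a cheap early-warning signal; a real AIOps setup would add seasonality handling and correlation across metrics on top of this.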

Integrating AI into CI/CD pipelines automates code analysis, test generation, and security scanning at scale. Advanced AIOps platforms correlate events and pinpoint root causes, enabling faster incident response. Even resilience patterns, such as circuit breakers, can be AI-tuned: for example, ML could dynamically adjust failure thresholds or trigger automated rollbacks under anomalous conditions.

Continuous Improvement and AI Assistants

AI closes the feedback loop for continuous improvement. Production telemetry — including performance metrics, usage patterns, and error logs — feeds into retraining models and refining heuristics, guiding iterative feature and performance enhancements. Developers increasingly rely on AI assistants (GitHub Copilot, Tabnine, or AI-powered IDE plugins) for context-aware code suggestions, bug detection, and automated refactoring.

Natural-language analysis tools can review pull requests for style, documentation, or security issues. Low-code/no-code AI platforms and automated MLOps services let teams prototype and deploy new models or analytics with minimal coding. Together, these practices accelerate release cycles and embed data-driven learning into every update.

Edge AI for Resilience

Edge AI pushes intelligence to the network perimeter, enhancing autonomy and uptime. IoT and edge devices can run inference locally using TensorFlow Lite, ONNX Runtime, or dedicated accelerators like Google’s Edge TPU.

For example, an embedded model on a factory sensor might detect equipment anomalies and trigger a local shutdown without cloud interaction. On-device inference reduces reliance on connectivity and central servers — only critical alerts or aggregated summaries are sent upstream. In distributed edge clusters or 5G/MEC deployments, this decentralization keeps core functions running even if parts of the network fail. By avoiding single points of failure, Edge AI helps sustain operations under adverse conditions.

Conclusion

Bridging UI, DevOps, and AI is about creating systems that are more than the sum of their parts. We started with the Angular UI, ensuring the user interface remains robust through design and technical measures. We connected it to a Node.js backend engineered with resilience patterns and maintained via solid DevOps practices. Then we wove in AI, which can elevate both user experience and operational stability.

The full-stack engineer’s approach to resilient systems means thinking across disciplines: when writing a front-end feature, consider how a CI pipeline will test it and how it will handle failures; when deploying a new service, consider how the user will perceive any downtime or errors. We visualized how code travels from a Git commit to a running system and how each piece fits into a fault-tolerant architecture. UI/UX flow charts guided us in designing for the user’s journey, even when things go wrong. Code snippets illustrated concrete techniques to implement resilience at the code level.